Cut Mobile Robot Dev Time in Half

Developers first started using ROS (Robot Operating System), now known as ROS1, because it eliminated the need for proprietary robotic programming expertise. But they discovered that ROS1 couldn’t support groups—or swarms—of mobile robots.

This shortcoming stems from ROS1’s limited support for real-time programming, reactivity, and low-latency communication, all of which are needed for accurate robot control. For instance, a fleet of autonomous mobile robots in a smart factory must be able to work together to select, grasp, and move items while keeping out of one another’s way. Such tasks are impossible without real-time computer vision processing.

ROS2 introduces significant API changes over ROS1. These changes enable ROS2 to support a wider range of computing environments, take advantage of technology that’s incompatible with ROS1, and accommodate real-time computer vision programming. As a result, developers can, in theory, use open source middleware for swarm robotics.

But one issue still prevents many developers from building commercial mobile robots with either version of ROS. The ROS open source community does not have the capacity to offer a warranty and stand behind the robots that people build on the operating system.

This created an opportunity for ADLINK, which responded with the ROS2 autonomous mobile robot controller: ROScube. It’s a ROS2-optimized development platform that comes with a warranty, providing customers with a solution that integrates and simplifies hardware and software. And by offering a library of algorithms and software, it cuts programming time in half.

“In traditional robot development, programming is the most time-consuming phase. The ability to cut that time by 50 percent means developers can test and refine their work much faster,” explained Bill Wang, Project Manager at ADLINK.

According to Wang, solution integrators using ROScube have gone from unboxing the controller to a proof-of-concept (PoC) deployment in three months. They’re able to achieve this by using the algorithms that come with ROScube instead of having to program them on their own.

Developers also gain access to ADLINK for technical support and troubleshooting. And sourcing hardware and software from a single vendor simplifies the process of building mobile robots, because the components are designed to work together.

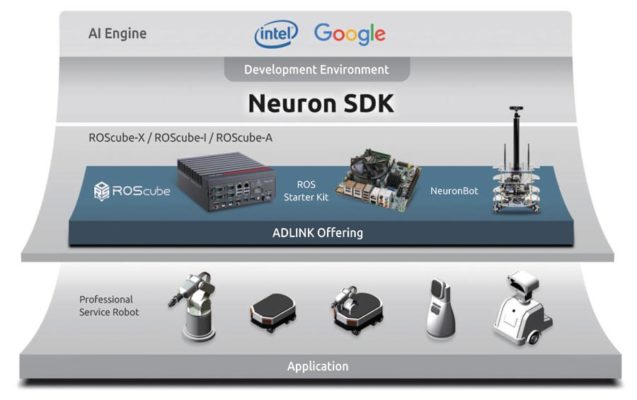

A Look Into Robot Vision

ROScube includes the ADLINK Neuron SDK for internal communication, as well as for the vehicle-to-vehicle coordination necessary for swarm mobile robot applications. The SDK also comes with a commercial, rather than open source, version of the Data Distribution Service (DDS) middleware to help ensure reliable, real-time communications. It also provides a collection of quality of service (QoS) resources, the NeuronLib API, a user interface, fleet management software, and compatibility with both ROS1 and ROS2.
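
To make the QoS idea more concrete, here is a minimal Python sketch of the request-versus-offered matching that DDS-based systems such as ROS2 apply to reliability and durability settings: a publisher and a subscriber only connect when the publisher offers at least what the subscriber requests. This is a toy model, not the Neuron SDK or the ROS2 client API. The `QosProfile` class, the `is_compatible` function, and the example profile names are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from enum import IntEnum


class Reliability(IntEnum):
    BEST_EFFORT = 0  # samples may be dropped
    RELIABLE = 1     # delivery is retried until acknowledged


class Durability(IntEnum):
    VOLATILE = 0         # late joiners get nothing from the past
    TRANSIENT_LOCAL = 1  # publisher keeps samples for late joiners


@dataclass(frozen=True)
class QosProfile:
    """Hypothetical stand-in for a QoS profile (not a real SDK type)."""
    reliability: Reliability
    durability: Durability
    depth: int = 10  # samples kept under a keep-last history


def is_compatible(offered: QosProfile, requested: QosProfile) -> bool:
    """Return True if the publisher offers at least what the subscriber requests."""
    return (offered.reliability >= requested.reliability
            and offered.durability >= requested.durability)


# Illustrative profiles for a small fleet (names are assumptions).
fleet_status_pub = QosProfile(Reliability.RELIABLE, Durability.TRANSIENT_LOCAL)
camera_pub = QosProfile(Reliability.BEST_EFFORT, Durability.VOLATILE, depth=1)
planner_sub = QosProfile(Reliability.RELIABLE, Durability.VOLATILE)

print(is_compatible(fleet_status_pub, planner_sub))  # True
print(is_compatible(camera_pub, planner_sub))        # False
```

In this sketch the planner, which requests reliable delivery, matches the fleet-status publisher but not the best-effort camera stream. That kind of mismatch is one reason QoS settings need to be chosen deliberately when many robots share topics.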

All of this gives developers support that’s lacking with robots built using open source community tools. The only skills they need are the ability to use common programming languages such as C, C++, and Python, and familiarity with conventional operating systems like Linux. No deep robotics knowledge or expertise is necessary.

The Neuron SDK, ROScube, ROS Starter Kit, and NeuronBot support computational platforms for AI algorithms and inference needed for robot vision, object recognition, and training (Figure 1).

No Loss of Performance

Short development times do not mean sacrificing performance or capabilities. The ROScube works with technologies such as Intel® RealSense™ depth cameras and the Intel® Movidius™ VPU neural compute engine.

RealSense cameras enable autonomous mobile robots to compute color and distance information to perform localization and navigation. This is critical as such robots must be able to plan, execute, and alter their route and avoid collisions with moving and stationary objects.
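
As a rough illustration of how distance information can feed collision avoidance, the Python sketch below picks a forward speed from a depth frame. It does not use the RealSense SDK: the frame is a plain list of rows with depth in meters, a value of 0.0 is assumed to mean "no reading," and the function names, thresholds, and the choice to inspect only the middle third of the image are hypothetical simplifications.

```python
from typing import List, Optional

Frame = List[List[float]]  # depth in meters; 0.0 is assumed to mean "no reading"


def nearest_obstacle(frame: Frame, col_start: int, col_end: int) -> Optional[float]:
    """Smallest valid depth in the given column range, or None if there is none."""
    readings = [d for row in frame for d in row[col_start:col_end] if d > 0.0]
    return min(readings) if readings else None


def speed_command(frame: Frame, stop_at: float = 0.5,
                  slow_at: float = 1.5, cruise: float = 1.0) -> float:
    """Forward speed in m/s based on the nearest obstacle ahead."""
    width = len(frame[0])
    # Look only at the middle third of the image, roughly the robot's path.
    nearest = nearest_obstacle(frame, width // 3, 2 * width // 3)
    if nearest is None:
        return 0.0  # no valid depth data: stop, as a conservative choice
    if nearest >= slow_at:
        return cruise
    if nearest <= stop_at:
        return 0.0
    # Scale speed linearly between the stop and slow distances.
    return cruise * (nearest - stop_at) / (slow_at - stop_at)


clear = [[3.0] * 6, [2.5] * 6]
near = [[3.0, 3.0, 1.0, 3.0, 3.0, 3.0], [3.0] * 6]
blocked = [[3.0, 3.0, 0.4, 0.0, 3.0, 3.0]]

print(speed_command(clear))    # 1.0
print(speed_command(near))     # 0.5
print(speed_command(blocked))  # 0.0
```

A production robot would combine this kind of check with localization and path planning so that it can reroute rather than only slow down or stop.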

The AI algorithm library contained in Movidius supports AI frameworks and neural networks, allowing mobile robots to learn and recognize objects and human faces. Both skills are important to deliver items and interact with objects—including other robots—and people.

Real-time communication among multiple mobile robots and other equipment is also handled by the ROScube controller, which comes equipped with a choice of Intel® Xeon® or Intel® Core™ processors in addition to a wide range of I/O ports. In this way, autonomous mobile robots built with ROScube can use computer vision to handle a variety of scenarios.

Given that the ROScube is compatible with both ROS1 and ROS2, it enables businesses to develop whichever mobile robot type best suits their needs. In either case, the solution can shorten the time required to program robots, keep costs down, and raise the level of performance.