
Modular Machine Vision for the Industrial Edge

The AI landscape is changing, fast. Too fast, in most cases, for industrial vision systems like automated quality-inspection systems and autonomous robots that will be deployed for years if not decades.

If you’re a systems integrator, OEM, or factory operator trying to get the most out of a machine vision system, how do you future-proof your platform and overcome the anxiety associated with launching a design just months or weeks before the next game-changing AI algorithm or architecture might be introduced?

To answer this question, let’s deconstruct a typical machine vision system.

The Anatomy of a Machine Vision System

Historically, industrial machine vision systems have consisted of a camera or optical sensor, lighting to illuminate the capture area, a host PC and/or controller, and a frame grabber. The frame grabber is of particular interest: it captures individual still frames from the camera’s stream at full resolution, which simplifies analysis by AI or computer vision algorithms.

The cameras or optical sensors connect directly to a frame grabber over interfaces such as CoaXPress, GigE Vision, or MIPI. The frame grabber itself is usually a slot card that plugs into a vision platform or PC and communicates with the host over PCI Express.

Besides capturing high-resolution images, a frame grabber can synchronize and trigger multiple cameras at once and perform local image processing (like color correction) as soon as a still shot is captured. This not only eliminates the latency, and potentially the cost, of transmitting images elsewhere for preprocessing, but also frees the host processor to run inferencing algorithms, execute corresponding control functions (like turning off a conveyor belt), and handle other tasks.
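
As a rough picture of what "local image processing" means, the sketch below applies a 3×3 color correction matrix (CCM) to 8-bit RGB pixels: each output channel is a weighted sum of the input red, green, and blue values. It is a software-only illustration under stated assumptions: frames are modeled as lists of (R, G, B) tuples, and the coefficients in `EXAMPLE_CCM` are made up. A real frame grabber would usually do this in dedicated hardware such as an FPGA, with calibrated coefficients.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]            # 8-bit (R, G, B)
Matrix3x3 = Sequence[Sequence[float]]   # rows produce output R, G, B


def apply_ccm(pixels: List[Pixel], ccm: Matrix3x3) -> List[Pixel]:
    """Apply a 3x3 color correction matrix to each 8-bit RGB pixel.

    Each output channel is a weighted sum of the input R, G, B values,
    rounded and clamped to the valid 0..255 range.
    """
    corrected = []
    for r, g, b in pixels:
        out = []
        for row in ccm:
            value = row[0] * r + row[1] * g + row[2] * b
            out.append(max(0, min(255, round(value))))
        corrected.append((out[0], out[1], out[2]))
    return corrected


# Hypothetical coefficients; each row sums to 1.0, so neutral grays stay neutral.
EXAMPLE_CCM = [
    [1.20, -0.10, -0.10],
    [-0.05, 1.10, -0.05],
    [0.00, -0.10, 1.10],
]

if __name__ == "__main__":
    frame = [(100, 150, 200), (255, 255, 255), (0, 0, 0)]
    print(apply_ccm(frame, EXAMPLE_CCM))
```

Because every row of the example matrix sums to 1.0, white and black pass through unchanged while colored pixels are shifted; clamping keeps out-of-range results inside the 8-bit range.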

In some ways, this architecture is more complex than newer ones that integrate different subsystems in this chain. However, it is much more scalable and provides a higher degree of design flexibility, because the image-processing performance you can achieve is limited only by the number of slots available in the host PC or controller.

Well, that and the amount of bandwidth you have running between the host processor and the frame grabber, that is.

Seeing 20/20 with PCIe 4.0

For machine vision systems, especially those that rely on multiple cameras and high-resolution image sensors, system bandwidth can quickly become an issue. A single camera is rarely the problem on its own. For example, a 4MP camera stream may need only about 24 Mbps of throughput (the exact figure depends on frame rate, bit depth, and compression), which barely dents the roughly 1 GB/s (about 8 Gbps) per lane that PCIe 3.0 interconnects provide.
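
How much throughput a camera needs depends heavily on resolution, bit depth, frame rate, and compression, so it is worth running the numbers for your own sensors. The sketch below estimates the raw, uncompressed data rate of one stream and compares it with a single PCIe 3.0 lane (8 GT/s with 128b/130b encoding, or about 985 MB/s per direction). The 2048 × 2048 resolution, 8-bit monochrome pixels, and 30 fps frame rate are illustrative assumptions, and blanking and protocol overhead are ignored.

```python
# Rough sizing sketch: uncompressed camera data rate vs. one PCIe 3.0 lane.
# Assumptions: raw pixels, no compression, no blanking or packet overhead.

# 8 GT/s per lane, 128b/130b line encoding, 8 bits per byte -> ~985 MB/s
PCIE3_LANE_BYTES_PER_S = 8e9 * (128 / 130) / 8


def raw_stream_bytes_per_s(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed data rate of one camera stream, in bytes per second."""
    return width * height * bits_per_pixel / 8 * fps


def lane_fraction(stream_bytes_per_s: float,
                  lane_bytes_per_s: float = PCIE3_LANE_BYTES_PER_S) -> float:
    """Fraction of a single PCIe lane consumed by the stream."""
    return stream_bytes_per_s / lane_bytes_per_s


if __name__ == "__main__":
    # Hypothetical 4MP (2048 x 2048) monochrome sensor, 8 bits per pixel, 30 fps.
    rate = raw_stream_bytes_per_s(2048, 2048, 8, 30)
    print(f"{rate / 1e6:.1f} MB/s ({rate * 8 / 1e9:.2f} Gbit/s), "
          f"{lane_fraction(rate):.0%} of one PCIe 3.0 lane")
```

Under these assumptions the raw stream comes to roughly 126 MB/s, or about 13% of one lane, so a single camera is rarely the bottleneck; multiple cameras are another matter.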


However, most machine vision systems accept inputs from multiple cameras and therefore ingest multiple streams, which quickly eats up bandwidth. Add a GPU or FPGA acceleration card (or two) for high-accuracy, low-latency AI or computer vision algorithm execution, and you’ve got a potential bandwidth bottleneck between the peripherals and the host processor.
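
To see how quickly the demand adds up, the sketch below totals the throughput of several devices and reports how much capacity is left on a link. Treating everything as one shared budget is a deliberate simplification: in a real system the frame grabber and each accelerator card have their own PCIe links, and what matters is how that traffic converges on the host processor’s lanes. The device list and every rate in it are hypothetical placeholders to be replaced with measured figures.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Device:
    """A peripheral drawing on the host's PCIe bandwidth (hypothetical model)."""
    name: str
    bytes_per_s: float  # sustained one-direction demand


def link_headroom(devices: List[Device], link_bytes_per_s: float) -> float:
    """Unused capacity in bytes/s; a negative result means the link is oversubscribed."""
    demand = sum(d.bytes_per_s for d in devices)
    return link_bytes_per_s - demand


if __name__ == "__main__":
    GB = 1e9
    # Hypothetical load: eight raw 4MP, 8-bit, 30 fps cameras (~0.126 GB/s each)
    # plus an accelerator card that receives all of those frames for inference.
    cameras = [Device(f"cam{i}", 0.126 * GB) for i in range(8)]
    accelerator = Device("accelerator", sum(c.bytes_per_s for c in cameras))
    for label, capacity in [("PCIe 3.0 x1", 0.985 * GB), ("PCIe 3.0 x4", 3.94 * GB)]:
        headroom = link_headroom(cameras + [accelerator], capacity)
        print(f"{label}: {headroom / GB:+.3f} GB/s headroom")
```

With these placeholder numbers, the combined demand of about 2 GB/s oversubscribes a single PCIe 3.0 lane but fits within four lanes, which is the kind of margin check that drives the tradeoffs described next.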

At this point, many industrial machine vision integrators have had to start making tradeoffs. Either you add more host CPUs to accommodate the bandwidth shortage, opt for a backplane-based system and make the acceleration cards a bigger part of your design, or select a host PC or controller with accelerators already integrated. Regardless, you’re adding significant cost, thermal-dissipation requirements, power consumption, and a host of other obstacles embedded systems engineers are all too familiar with.

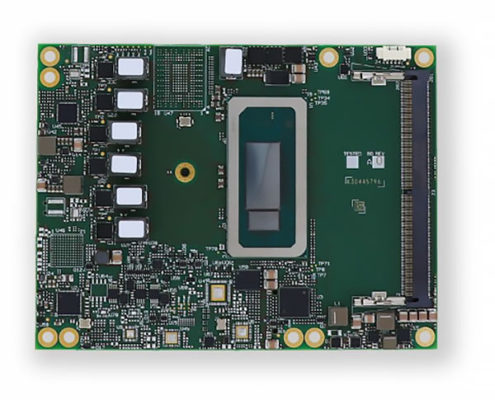

Or you could opt for a platform with next-generation PCIe interfaces, such as the CALLISTO COM Express 3.1 Type 6 module from the IoT-solution developer SECO (Figure 1).

With a 13th Gen Intel® Core™ processor at its center, the SECO CALLISTO COM Express module supports a PCI Express Graphics (PEG) Gen4 x8 interface, up to two PEG Gen4 x4 interfaces, and up to eight PCIe 3.0 x1 interfaces, according to Maurizio Caporali, Chief Product Officer at SECO. Gen4 PCIe interfaces double the per-lane bandwidth of their PCIe 3.0 counterparts to almost 2 GB/s (about 16 Gbps), which, for the same number of lanes, essentially yields twice as many video channels on your machine vision platform without other sacrifices.
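
The doubling follows directly from the signaling rates: PCIe 3.0 runs at 8 GT/s per lane and PCIe 4.0 at 16 GT/s, both with 128b/130b encoding. The sketch below converts those rates into approximate per-direction link bandwidth for the interface widths listed for CALLISTO, then counts how many identical streams fit on a link. It ignores packet and protocol overhead, and the 0.126 GB/s stream (a raw 4MP, 8-bit, 30 fps camera) is an illustrative assumption.

```python
# Sketch: approximate usable PCIe bandwidth per direction, counting only
# 128b/130b line encoding (used by Gen3 and Gen4); protocol overhead is ignored.

GT_PER_S = {3: 8.0, 4: 16.0}  # transfer rate per lane, in gigatransfers per second


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth of a PCIe link, in GB/s."""
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


def streams_per_link(gen: int, lanes: int, stream_gbytes_per_s: float) -> int:
    """Number of identical streams that fit on the link, ignoring overhead."""
    return int(link_gbytes_per_s(gen, lanes) // stream_gbytes_per_s)


if __name__ == "__main__":
    # Link widths follow the CALLISTO interfaces listed in the text.
    for label, gen, lanes in [("PEG Gen4 x8", 4, 8), ("PEG Gen4 x4", 4, 4), ("PCIe 3.0 x1", 3, 1)]:
        print(f"{label}: ~{link_gbytes_per_s(gen, lanes):.2f} GB/s")
    # Hypothetical 0.126 GB/s stream (raw 4MP, 8-bit, 30 fps).
    print("x4 streams, Gen3 vs Gen4:",
          streams_per_link(3, 4, 0.126), streams_per_link(4, 4, 0.126))
```

For a x4 link, the count goes from 31 streams at Gen3 to 62 at Gen4 under these assumptions; real-world numbers will be lower once protocol overhead and other traffic are accounted for.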

Caporali explains that the 13th Gen Intel® Core™ processor brings further advantages to machine vision, including up to 14 Performance and Efficient (“P” and “E”) cores and as many as 96 Intel® Iris® Xe graphics execution units that can be leveraged on a workload-by-workload basis to optimize system performance, power consumption, and heat dissipation. All of this is available at a 15W or 45W TDP, depending on SKU, in an industrial-grade, standards-based SECO module that measures just 95 mm x 125 mm.

To make matters simpler, the platform is compatible with the OpenVINO™ toolkit, which optimizes computer vision models for deployment on the processor’s CPU cores or its integrated graphics for maximum performance. CALLISTO users can also use SECO’s CLEA AI-as-a-Service (AIaaS) software platform, a scalable, API-based edge-to-cloud solution for data orchestration, device lifecycle management, and AI model deployment that lets machine vision users improve AI model performance over time and update their endpoints over the air.

“CLEA is fundamental to manage AI applications and models to be deployed remotely in your fleet of devices. When the customer has thousands or hundreds of devices in the field, CLEA provides the opportunity for easily scalable remote management,” Caporali says.

Modular Machine Vision for the Industrial Edge

Creating an industrial machine vision solution is a significant undertaking in time, cost, and resources. Not only does it require the assembly of niche technologies like AI, high-speed cameras, high-resolution lenses, and specialized video processors, but these complex systems must deliver maximum value over extended periods to justify the investment.

One way to protect that investment is to modularize your system architecture so that elements can be upgraded over time. A machine vision platform architecture built around frame grabbers lets OEMs, integrators, and users scale their video processing and camera support as needed, and the modular architecture of COM modules, which plug into a custom carrier card, allows the same for the host PC or controller itself. So, with careful planning, meeting the machine vision demands of the future can come down to updating the carrier board design for CALLISTO or swapping in a newer module, rather than redesigning the entire platform, all thanks to a fully modular approach.

In short: no more anxiety for machine vision engineers.

This article was edited by Christina Cardoza, Associate Editorial Director for insight.tech.