IoT Virtualization Jump-Starts Collaborative Robots

The next evolution in manufacturing automation is taking shape around collaborative robots, or cobots. A type of autonomous robot, cobots can work safely alongside human workers. But while their advantages seem obvious, the design of these complex systems is anything but.

Yes, most of the enabling technologies required to build a cobot exist today. And many are already mainstream, from high-resolution cameras that let robots see the world to multicore processors with the performance to locally manage IoT connectivity, edge machine learning, and control tasks.

The challenge is not so much the availability of technology as it is the process of bringing it all together—and doing so on a single platform in a way that reduces power consumption, cost, and design complexity. A logical starting point to achieve this would be replacing multiple single-function robotic controllers with one high-end module. But even that’s not so simple.

“Collaborative robots have to perform multiple tasks at the same time,” says Michael Reichlin, Head of Sales & Marketing at Real-Time Systems GmbH, a leading provider of engineering services and products for embedded systems. “That starts with real-time motion control and goes up to high-performance computing.”

“The increasing number of sensors, interactivity, and communication functionality of collaborative robots demands versatile controllers capable of executing various workloads that have very different requirements,” Reichlin continues. “You need to have these workloads running in parallel and they cannot disturb each other.”

This is where things start to get tricky.

IoT Virtualization and Collaborative Robots in Manufacturing

One of the benefits of multicore processing technology is that software and applications can view each core as a standalone system with its own dedicated threads and memory. That’s how a single controller can manage multiple applications simultaneously.

Historically, the downside of this architecture in robotics has been that viewing cores as discrete systems doesn’t mean they are discrete systems. For example, memory resources are often shared between cores, and there’s only so much to go around. If tasks aren’t scheduled and prioritized appropriately, sharing can quickly become a resource competition that increases latency, and that’s obviously not ideal for safety-critical machines like cobots.

Even if there were ample memory and computational resources to support several applications at once on a multicore processor, you still wouldn’t be able to assign just one workload to one core and call it a day. Because many applications in complex cobot designs must pass data to one another (for example, a sensor input feeds an AI algorithm that informs a control function), there’s often a real need for cores and software to share memory.

This returns us to the issue of partitioning, or as Reichlin put it previously, the ability for workloads to run in parallel and not disturb one another. But how do you construct a multi-purpose system on the same hardware that can safely share computational resources without sacrificing performance?

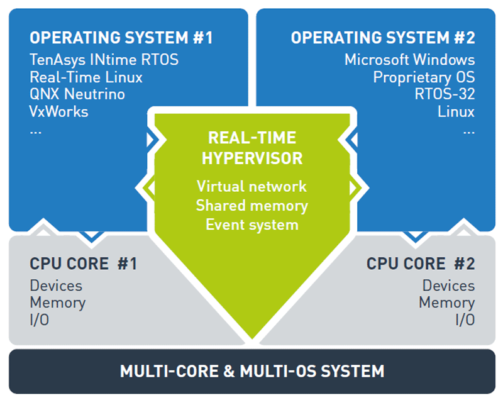

The answer is a real-time hypervisor. Hypervisors manage different operating systems, shared memory, and system events to ensure all workloads on a device remain isolated while still receiving the resources they need (Figure 1).

Some hypervisors are software layers that separate different applications. But to meet the deterministic requirements of cobots, bare-metal versions like the Real-Time Hypervisor integrate tightly with IoT-centric silicon like 6th gen Intel® Atom and 11th gen Intel® Core™ processors.

The Atom x6000E and 11th gen Core families support Intel® Virtualization Technology (Intel® VT-x), a hardware-assisted abstraction of compute, memory, and other resources that enables real-time performance for bare-metal hypervisors.

“To keep the determinism on a system, you cannot have a software layer in between your real-time application and hardware. We do not have this software layer,” Reichlin explains. “Customers can just set up their real-time application and have direct hardware access.

“We start with the bootloader and separate the hardware to isolate different workloads and guarantee that you will have determinism,” he continues. “We do not add any jitter. We do not add any latency to real-time applications because of how we separate different cores.”
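The static separation Reichlin describes can be pictured as a partition plan in which every core and every private memory range belongs to exactly one workload. The sketch below is only an illustration of that idea, not the RTS Hypervisor's configuration format: the `Guest` class, the `find_conflicts` helper, the workload names, and the memory sizes are all hypothetical.

```python
from dataclasses import dataclass

MIB = 1024 * 1024


@dataclass(frozen=True)
class Guest:
    """One isolated workload (hypothetical model, not a vendor format)."""
    name: str
    cores: frozenset      # CPU core indices owned exclusively by this guest
    mem_start: int        # first byte of the private memory range
    mem_size: int         # length of the private memory range in bytes

    @property
    def mem_end(self):
        # Exclusive end address of the private range.
        return self.mem_start + self.mem_size


def find_conflicts(guests):
    """Return a list of descriptions for cores or memory claimed twice."""
    conflicts = []
    for i, a in enumerate(guests):
        for b in guests[i + 1:]:
            shared_cores = a.cores & b.cores
            if shared_cores:
                conflicts.append(f"{a.name}/{b.name}: cores {sorted(shared_cores)}")
            # Two half-open ranges overlap when each starts before the other ends.
            if a.mem_start < b.mem_end and b.mem_start < a.mem_end:
                conflicts.append(f"{a.name}/{b.name}: memory overlap")
    return conflicts


# Assumed example: one core for motion control, three for vision and AI.
motion = Guest("motion-control", frozenset({0}), 0, 512 * MIB)
vision = Guest("vision-ai", frozenset({1, 2, 3}), 512 * MIB, 2048 * MIB)
hmi = Guest("hmi", frozenset({3}), 256 * MIB, 512 * MIB)  # deliberately bad

print(find_conflicts([motion, vision]))       # a clean plan has no conflicts
print(find_conflicts([motion, vision, hmi]))  # the bad guest is flagged
```

A plan that passes this kind of check gives each workload resources that no other workload can touch, which is the precondition for the determinism claimed in the quote.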

Data transfer between cores partitioned by the RTS Hypervisor can be handled in a few ways, depending on requirements. For example, developers can use either a virtual network or message interrupts that send or read data when an event occurs.
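The event-driven option can be approximated on an ordinary operating system with a blocking mailbox: the receiver sleeps until a message arrives and reacts immediately. The sketch below uses Python threads and `queue.Queue` as a stand-in for two partitions and an interrupt; it does not use any hypervisor API, and the `distance_mm` field and the 500 mm threshold are assumptions chosen for illustration.

```python
import queue
import threading

SLOW_DOWN_BELOW_MM = 500  # hypothetical safety threshold


def control_loop(mailbox, decisions):
    """Wake on each message, decide whether to slow down, stop on None."""
    while True:
        msg = mailbox.get()  # blocks until the sender posts something
        if msg is None:      # sentinel: the sender is finished
            break
        decisions.append(msg["distance_mm"] < SLOW_DOWN_BELOW_MM)


def run_demo(distances):
    """Send distance readings to the control loop and collect its decisions."""
    mailbox = queue.Queue()
    decisions = []
    worker = threading.Thread(target=control_loop, args=(mailbox, decisions))
    worker.start()
    for d in distances:
        mailbox.put({"distance_mm": d})  # the "interrupt": wakes the receiver
    mailbox.put(None)
    worker.join()
    return decisions


print(run_demo([1200, 450, 800]))
```

The receiver spends no cycles polling, which is the appeal of event-driven messaging when the control side must stay responsive.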

A third option is transferring blocks of data via shared memory that can’t be overwritten by other workloads. Here, the RTS Hypervisor leverages native features of Intel® processors like software SRAM available on devices that support Intel® Time-Coordinated Computing (Intel® TCC). This new capability places latency-sensitive data and code into a memory cache to improve temporal isolation.

Features like software SRAM are automatically leveraged by the Real-Time Hypervisor without developers having to configure them. This is possible thanks to years of co-development between Real-Time Systems and Intel®.
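The shared-memory transfer described above can be illustrated with a single-writer block guarded by a sequence counter, so a reader can tell when it has caught the writer mid-update. This is a minimal sketch of that general pattern using Python's standard `multiprocessing.shared_memory` module, not the RTS Hypervisor's mechanism and not software SRAM; the block layout and the `publish`, `read_latest`, and `demo` names are assumptions. Pure Python also provides no memory-ordering guarantees, so the code shows the protocol rather than a production implementation.

```python
import struct
from multiprocessing import shared_memory

HEADER = struct.Struct("<Q")    # sequence counter: odd while a write is in progress
PAYLOAD = struct.Struct("<3d")  # hypothetical payload: x, y, z position in metres
SIZE = HEADER.size + PAYLOAD.size


def publish(buf, xyz):
    """Single writer: mark the block busy, update it, then mark it consistent."""
    (seq,) = HEADER.unpack_from(buf, 0)
    HEADER.pack_into(buf, 0, seq + 1)           # odd value: write in progress
    PAYLOAD.pack_into(buf, HEADER.size, *xyz)
    HEADER.pack_into(buf, 0, seq + 2)           # even value: data is consistent


def read_latest(buf, max_tries=100):
    """Reader: retry until the counter is even and unchanged across the read."""
    for _ in range(max_tries):
        (before,) = HEADER.unpack_from(buf, 0)
        if before % 2:
            continue                            # writer is mid-update
        xyz = PAYLOAD.unpack_from(buf, HEADER.size)
        (after,) = HEADER.unpack_from(buf, 0)
        if before == after:
            return before // 2, xyz             # (version number, payload)
    raise TimeoutError("writer kept the block busy")


def demo():
    """Map one OS shared-memory block twice: once to write, once to read."""
    writer = shared_memory.SharedMemory(create=True, size=SIZE)
    try:
        reader = shared_memory.SharedMemory(name=writer.name)
        try:
            publish(writer.buf, (0.10, 0.25, 0.40))
            publish(writer.buf, (0.12, 0.25, 0.38))
            return read_latest(reader.buf)
        finally:
            reader.close()
    finally:
        writer.close()
        writer.unlink()


if __name__ == "__main__":
    print(demo())
```

Because only one side ever writes, the reader needs no lock and never blocks the writer, which suits a real-time producer feeding a less time-critical consumer.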

Hypervisors Split Processors So Cobots Can Share Work

The rigidity of a bare-metal, real-time hypervisor affords design flexibility in systems like cobots. Now, systems integrators can pull applications with different timing, safety, and security requirements from different sources and seamlessly integrate them onto the same robotic controller.

Interference between processes and competition for limited resources are no longer a concern, because the hypervisor manages both. Real-Time Systems is also developing a safety-certified version of its hypervisor, which will further simplify the development and integration of mixed-criticality cobot systems.

Reichlin expects that industrial cobots, ranging from desktop personal assistants to those that support humans operating heavy machinery, will become mainstream over the next few years. And most will include a hypervisor that allows a single processor to share workloads, so that the cobot can share the work.

This article was edited by Georganne Benesch, Associate Content Director for insight.tech.