Cloud-Native Development for the IoT Edge

IoT software is going cloud-native. What does that mean for legacy code?

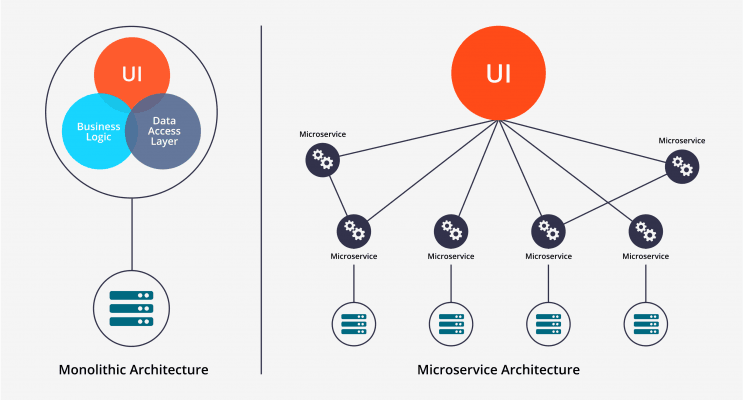

Let’s start by defining terms. “Cloud native” methods break an application into a series of microservices (Figure 1). Because cloud infrastructure is homogeneous, these microservices can be ultra-portable. As a result, they can be deployed almost anywhere and updated on the fly.

Cloud-native engineering offers greater scalability, agility, and opportunities for software reuse. It also makes life easier for the engineer. Small services are simpler to develop, manage, and maintain than a single large application.

At the Intersection of Enterprise and Embedded

But cloud-native methods are at odds with legacy embedded code. Embedded code is typically static and tailored to a specific platform. It is tested meticulously to ensure safe, secure, reliable, and deterministic operation.

Combining legacy and cloud-native code is no easy task. Consider the problems with certification.

“A safety-critical application needs to be hosted on top of a safety-critical environment,” said Paul Parkinson, Field Engineering Director at Wind River. “All underlying software needs to be developed to at least the same safety integrity level as the most critical application.”

Cloud-native applications typically run on Linux, which is not deterministic, and they carry many hidden dependencies. As a result, they often can’t be certified to standards such as IEC 61508, ISO 26262, or DO-178C.

Even if they could be certified, the cost of certification “is proportional to the number of effective source lines of code (eLOC) used in the system.” For scale, the Linux kernel alone consists of more than 25 million lines of code.

Cloud-Native or Safety-Certified?

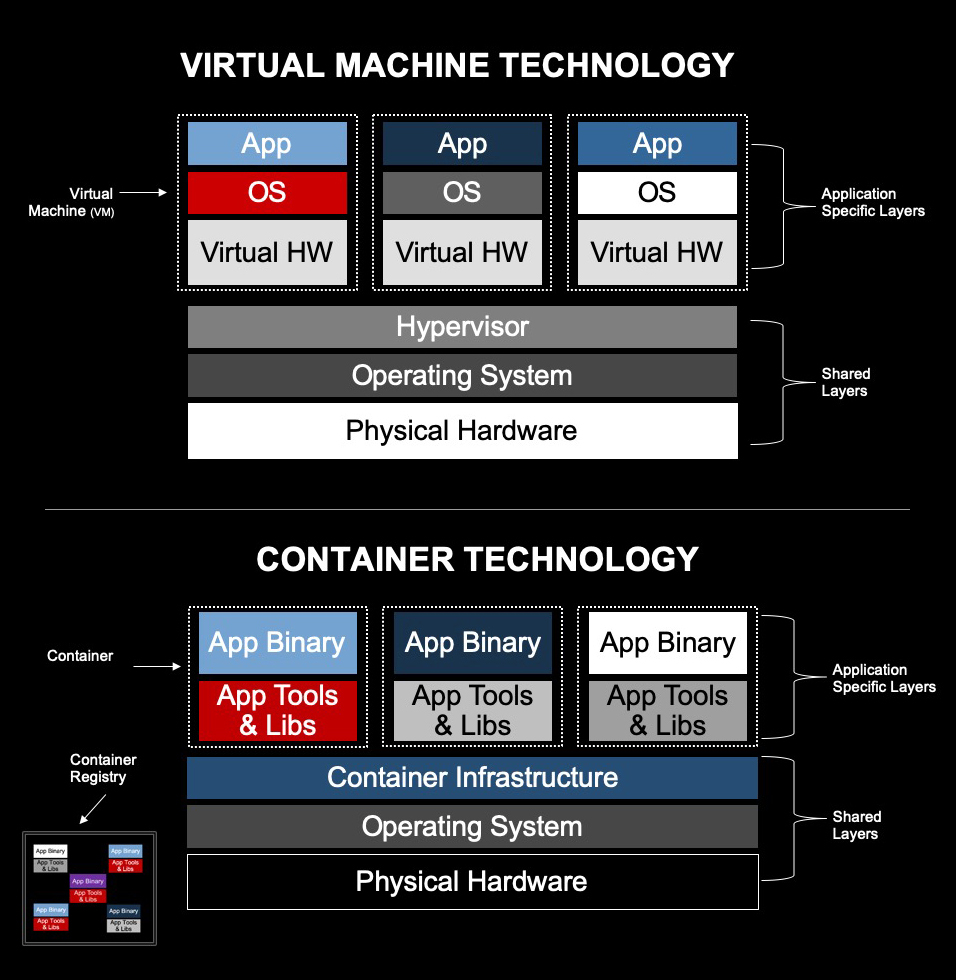

The differences between cloud-native and embedded engineering may be irreconcilable, but that’s okay. They can still be combined through virtualization and containerization.

As shown in Figure 2, virtualization runs multiple OSs side by side on a hypervisor layer. Containerization instead has all containers share a single host OS. This makes a container lighter than a virtual machine, because it packages only an application and its dependencies rather than a full guest OS.

Containers are foundational to cloud-native design, while virtualization has been favored for embedded design. But the two approaches can be combined with the right hypervisor.

Specifically, a Type 1 “bare metal” hypervisor can provide the determinism that embedded systems require. Because Type 1 hypervisors run directly on the hardware, with no host OS in between, they have direct access to system resources, which allows them to be efficient and reliable.

Just as important, they can be used to partition safety-critical and non-safety-critical functions.

“The ability to host safety-critical functions and general-purpose functions with no safety requirement on a real-time operating system platform using partitioning is well-proven,” said Parkinson. “Using hardware virtualization takes this to another level.”

For example, a safety-certified hypervisor makes it possible to deploy cloud-native analytics on a real-time controller. With this approach, cloud-native features can be added to existing embedded designs. Because the original code stays unchanged, certification costs are kept to a minimum and determinism is preserved.
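To make the partitioning idea concrete, here is a minimal Python sketch of a static partition table for a Type 1 hypervisor, plus a check that the safety-critical partition does not share CPU cores or memory with anything else. The `Partition` class, its fields, and the addresses are hypothetical, invented for illustration; they do not reflect any real hypervisor's configuration format, and a certified product enforces isolation in hardware and in the hypervisor itself, not in a script like this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """Hypothetical guest partition: one OS image pinned to cores and a RAM window."""
    name: str
    safety_critical: bool
    cpu_cores: frozenset[int]
    mem_start: int  # base physical address, in bytes (illustrative values only)
    mem_size: int   # size of the memory window, in bytes

    @property
    def mem_end(self) -> int:
        return self.mem_start + self.mem_size


def check_isolation(partitions: list[Partition]) -> list[str]:
    """Return human-readable isolation violations; an empty list means none found."""
    problems = []
    for i, a in enumerate(partitions):
        for b in partitions[i + 1:]:
            # Sharing cores is only flagged when a safety-critical guest is involved,
            # since sharing could break that guest's timing guarantees.
            shared = a.cpu_cores & b.cpu_cores
            if shared and (a.safety_critical or b.safety_critical):
                problems.append(f"{a.name} and {b.name} share cores {sorted(shared)}")
            # Half-open ranges [start, end) overlap if each starts before the other ends.
            if a.mem_start < b.mem_end and b.mem_start < a.mem_end:
                problems.append(f"{a.name} and {b.name} overlap in memory")
    return problems


# Hypothetical layout: an RTOS control loop on core 0, a Linux guest running
# containerized analytics on cores 1-3, each with its own memory window.
layout = [
    Partition("rtos-control", True, frozenset({0}), 0x8000_0000, 0x1000_0000),
    Partition("linux-containers", False, frozenset({1, 2, 3}), 0x9000_0000, 0x4000_0000),
]
print(check_isolation(layout))  # []
```

In this model the legacy control code lives untouched in its own partition, and new cloud-native workloads are confined to the Linux partition, which mirrors the "original code is unchanged" argument above.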

But there is a bigger picture to consider. Going cloud-native can be the foundation of digital transformation (Video 1).

This transformation can already be seen in the merging of IT with operational technology (OT). One industry forecast, for instance, predicted that 50 percent of OT providers would partner with IT companies on IoT offerings by 2020.

Of course, all of this depends on having a virtualization platform that also supports cloud-native applications. The good news is that the latest hypervisors are designed to do just that. “A lot of experience has been gained through the development, certification, and deployment of safety-critical systems in recent years,” Parkinson said.